Alibaba’s Qwen3 model family just received an upgrade with the release of Qwen3 Max. Positioned as a trillion-parameter-class model, it is designed for high-throughput instruction following and long-context reasoning, with a focus on multilingual engineering workflows, expanded context windows, and higher-fidelity code generation. With OpenAI’s GPT-5-Codex variant also recently released, the timing is right for a direct comparison. This article offers a detailed coding showdown between Qwen3 Max, GPT-5, and Claude Opus 4.

Let’s get going.

Qwen3 Max vs GPT-5 vs Claude Opus 4 – High-level comparison approach

To evaluate these models for engineering teams and developers, we examine: (1) architecture and scale signals, (2) token and context limits, (3) coding-specific benchmark positioning, (4) tooling and IDE/agent integration, and (5) long-horizon multi-file refactors and agentic workflows. Let’s start with the architectural specifics.

Architecture, scale, and training signals

Qwen3 Max. Positioned by Alibaba as the top tier in the Qwen3 family, Qwen3 Max emphasizes large-scale capacity, multilingual pretraining, and engineering-oriented fine-tuning to improve code completion and long-context reasoning. Public project trackers and provider pages describe it as closed-source with API access and multiple context configurations suitable for repository-scale tasks.

GPT-5. OpenAI presents GPT-5 as a unified, cross-capability model that advances coding, reasoning, and multi-modal understanding. A key architectural and product emphasis is adaptivity: GPT-5 can prioritize quick responses or spend longer internal “thinking” to solve complex programming problems. OpenAI also released a Codex-specialized variant (GPT-5-Codex) that’s tuned for agentic coding workflows, code review, and tight IDE/toolchain integrations.
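In the API, this quick-versus-thinking tradeoff is exposed as a per-request reasoning effort setting. The sketch below assumes the `reasoning={"effort": ...}` parameter of OpenAI's Responses API and the `gpt-5` model ID; the `pick_reasoning_effort` heuristic and its thresholds are hypothetical, and the request is only built here, not sent.

```python
from typing import Any


def pick_reasoning_effort(files_touched: int, needs_design: bool = False) -> str:
    """Map a rough task-size signal to a reasoning effort level.

    Hypothetical heuristic: the thresholds are illustrative, not vendor guidance.
    The returned strings are the effort levels GPT-5 accepts in the Responses API.
    """
    if needs_design or files_touched > 10:
        return "high"
    if files_touched > 3:
        return "medium"
    if files_touched > 1:
        return "low"
    return "minimal"


def build_request(prompt: str, files_touched: int, needs_design: bool = False) -> dict[str, Any]:
    """Build keyword arguments for a Responses API call; nothing is sent here."""
    return {
        "model": "gpt-5",
        "input": prompt,
        "reasoning": {"effort": pick_reasoning_effort(files_touched, needs_design)},
    }


if __name__ == "__main__":
    kwargs = build_request("Rename the helper in utils.py", files_touched=1)
    print(kwargs["reasoning"])
    # With the official `openai` package installed and OPENAI_API_KEY set:
    #   from openai import OpenAI
    #   response = OpenAI().responses.create(**kwargs)
    #   print(response.output_text)
```

Keeping the effort choice in one function makes it easy to log alongside results and compare latency and cost across effort levels on the same task.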

Claude Opus 4 (and Opus 4.1). Anthropic’s Opus line is explicitly targeted at software engineering workflows. Opus 4 was released with leading positions on coding benchmarks like SWE-bench and Terminal-bench; later point releases (Opus 4.1) improved multi-file refactoring precision and long-horizon agentic behavior. Anthropic advertises “conservative” edit behavior intended to reduce regressions during automated refactors.

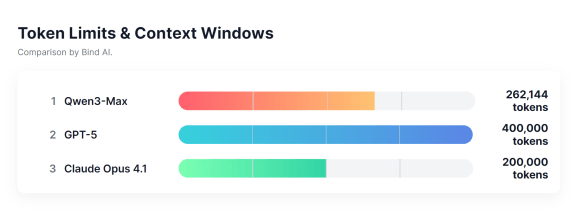

Token limits and long-horizon behavior

Qwen3 Max. Vendor documentation highlights expanded context windows for long files and multi-file projects; exact token caps depend on the configuration you choose from your provider. Qwen often ships in multiple context sizes, so pick the option that fits your repository and CI artifact sizes.
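A rough way to choose a context configuration is to estimate how many tokens your prompt will occupy and pick the smallest tier that fits. This sketch assumes about four characters per token (real tokenizers vary) and reuses the Qwen3-Max tier boundaries from the pricing table later in this article, reading "K" as thousands of tokens; `pick_context_tier`, `load_sources`, and the output headroom value are hypothetical.

```python
import math
from pathlib import Path
from typing import Optional

# Assumption: roughly 4 characters per token; real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4

# Qwen3-Max context tiers from this article's pricing table, in tokens ("K" read as 1,000).
QWEN3_MAX_TIERS = [32_000, 128_000, 252_000]


def estimate_tokens(texts: list[str]) -> int:
    """Crude token estimate for a list of documents."""
    return math.ceil(sum(len(t) for t in texts) / CHARS_PER_TOKEN)


def pick_context_tier(texts: list[str], output_headroom: int = 8_000,
                      tiers: list[int] = QWEN3_MAX_TIERS) -> Optional[int]:
    """Return the smallest tier that fits the prompt plus headroom, or None if none does."""
    needed = estimate_tokens(texts) + output_headroom
    for tier in sorted(tiers):
        if needed <= tier:
            return tier
    return None  # Too large for any tier: chunk, retrieve, or summarize first.


def load_sources(root: str, pattern: str = "*.py") -> list[str]:
    """Read every file under `root` matching `pattern` (hypothetical helper)."""
    return [p.read_text(encoding="utf-8", errors="ignore")
            for p in Path(root).rglob(pattern) if p.is_file()]


if __name__ == "__main__":
    # Real usage would be: sources = load_sources("path/to/repo")
    sources = ["def f():\n    return 1\n"] * 100
    print(estimate_tokens(sources), "estimated tokens ->", pick_context_tier(sources))
```

For repositories that land in the "None" case, retrieval or per-module sessions are usually cheaper than forcing everything into the largest window.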

GPT-5. Marketed with adaptive thinking time and large context support; Codex variants are explicitly designed to manage long sessions and long tool-call chains. For engineering use, this means improved cross-file reasoning within a single session and more reliable agentic tool orchestration.

Claude Opus 4. Anthropic emphasizes sustained multi-hour performance and stability for long-running engineering tasks. Opus 4.x is tuned to maintain style and correctness across thousands of tokens and to make surgical, precise edits during multi-file refactors.

Qwen3 Max vs GPT-5 vs Claude Opus 4 – Benchmarks and coding fidelity

Benchmarks change quickly, and vendor leaderboards provide an initial signal but not the whole picture.

- Vendor leaderboards. Anthropic published SWE-bench/Terminal-bench numbers positioning Opus 4 near the top for coding tasks. OpenAI’s GPT-5 (and GPT-5-Codex) is positioned as their most advanced coding model with explicit improvements for code review and agentic execution. Qwen3 Max is claimed to improve on prior Qwen3 metrics for coding, logic, and instruction following. These vendor statements are informative but should be validated with workload-specific tests.

- Real-world task differences. You’ll observe distinct behavior across three axes: single-file synthesis (algorithms, helper functions), multi-file orchestration (refactors, dependency updates), and long-horizon automation (agents running tests, CI integration). GPT-5-Codex tends to excel at interactive, tool-heavy loops and quick prototyping; Claude Opus 4/4.1 is unusually strong at conservative multi-file edits and long refactors; Qwen3 Max aims to deliver balanced multilingual performance and enterprise scale. Independent benchmarking on your actual test suites is essential to choosing a winner for your use case.
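A workload-specific benchmark can start as simply as recording, for each task in your own suite, whether the model's patch made the tests pass, then aggregating pass rates per model and per axis. The `TaskResult` record and the axis labels below are hypothetical and mirror the three axes described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    model: str     # placeholder label for the model under test
    axis: str      # "single-file", "multi-file", or "long-horizon"
    task_id: str
    passed: bool   # did your own test suite pass after applying the model's patch?


def pass_rates(results: list[TaskResult]) -> dict[tuple[str, str], float]:
    """Aggregate per-task outcomes into a pass rate for each (model, axis) pair."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    passes: dict[tuple[str, str], int] = defaultdict(int)
    for r in results:
        key = (r.model, r.axis)
        totals[key] += 1
        passes[key] += int(r.passed)
    return {key: passes[key] / totals[key] for key in totals}


if __name__ == "__main__":
    demo = [
        TaskResult("model-a", "single-file", "t1", True),
        TaskResult("model-a", "single-file", "t2", False),
        TaskResult("model-b", "multi-file", "t3", True),
    ]
    for (model, axis), rate in sorted(pass_rates(demo).items()):
        print(f"{model:<10} {axis:<12} {rate:.0%}")
```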

Tooling, integrations, and developer UX

GPT-5. Deep integration across OpenAI’s Codex tooling, VS Code extensions, and agent frameworks gives GPT-5 an advantage if you rely on tight IDE coupling, automated code reviews, test orchestration, and real-time pair programming. The Codex flavor emphasizes safety gating and permissioned access for sensitive repositories.

Claude Opus 4. Anthropic focuses on API stability and agent builders; Opus 4.1 highlights multi-tool orchestration and a lower propensity to introduce bugs during automatic edits. If you plan to run autonomous agents that manage ephemeral CI tasks, PRs, and changelogs, Opus 4.x is explicitly optimized for that style.

Qwen3 Max. Delivered through cloud partners and regional providers, Qwen3 Max is attractive for enterprises that need hosted control, regulatory locality, or multilingual support. Integrations are maturing, and Qwen variants are becoming a pragmatic choice for APAC and enterprise workflows.

Qwen3 Max vs GPT-5 vs Claude Opus 4 – Pricing comparison

Input/output pricing comparison

| Model | Input price (USD per 1M tokens) | Output price (USD per 1M tokens) | Notes |
|---|---|---|---|
| Qwen3-Max | $0.86–$2.15 | $3.44–$8.60 | Tiered: 0–32K ($0.86/$3.44), 32K–128K ($1.43/$5.73), 128K–252K ($2.15/$8.60). |
| GPT-5 | $0.625–$2.50 | $5.00–$20.00 | Batch ($0.625/$5.00), Standard ($1.25/$10.00), Priority ($2.50/$20.00); up to 400K context. |
| Claude Opus 4.1 | $15.00 | $75.00 | No tiers; premium real-time reasoning; prompt-caching discount available. |

Latency and pricing depend heavily on context window, requested throughput, and whether you use hosted APIs or cloud-attached managed services. GPT-5-Codex is oriented toward interactive, low-latency loops; Anthropic’s Opus line emphasizes reliability for long agentic runs; Qwen3 Max targets enterprise API access with regional deployment options. When estimating production bills, evaluate both cost per token and cost per hour of continuous agent runs, especially for long-horizon workloads.
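To turn the pricing table into a production estimate, multiply per-request token counts by the per-million-token prices and scale by request volume. This is a minimal sketch assuming that the Qwen3-Max tier is selected by the request's input size, using GPT-5 Standard rates, and ignoring batch, caching, and priority adjustments; the model keys, the 200K bound for Opus 4.1 (its context window), and the helper names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    max_input_tokens: int  # inclusive upper bound on input tokens for this price tier
    input_per_m: float     # USD per 1M input tokens
    output_per_m: float    # USD per 1M output tokens


# Prices from the table above (GPT-5 at Standard rates); re-check vendor pages before relying on them.
PRICING: dict[str, list[Tier]] = {
    "qwen3-max": [Tier(32_000, 0.86, 3.44), Tier(128_000, 1.43, 5.73), Tier(252_000, 2.15, 8.60)],
    "gpt-5-standard": [Tier(400_000, 1.25, 10.00)],    # 400K is the table's context figure
    "claude-opus-4.1": [Tier(200_000, 15.00, 75.00)],  # single tier; 200K context window
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request; the tier is chosen by input size (assumption)."""
    for tier in PRICING[model]:
        if input_tokens <= tier.max_input_tokens:
            return (input_tokens * tier.input_per_m + output_tokens * tier.output_per_m) / 1_000_000
    raise ValueError(f"{input_tokens} input tokens exceeds every tier listed for {model}")


def agent_hour_cost(model: str, requests_per_hour: int, avg_input: int, avg_output: int) -> float:
    """Cost of one hour of a continuous agent loop with a steady request rate."""
    return requests_per_hour * request_cost(model, avg_input, avg_output)


if __name__ == "__main__":
    # Hypothetical workload: 60 calls/hour, 20K input and 2K output tokens per call.
    for name in PRICING:
        print(f"{name:<16} ${agent_hour_cost(name, 60, 20_000, 2_000):.2f} per agent-hour")
```

Caching and batch discounts would lower these figures, while long agent sessions that accumulate context can push Qwen3-Max requests into higher tiers over time.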

Which model for which coding workload?

- Quick prototyping / pair-programming: GPT-5 (Codex) — best interactive tooling and tight editor integrations.

- Large refactors / multi-file style enforcement: Claude Opus 4 / 4.1 — conservative edits, precise multi-file behavior, and high benchmark scores on SWE-bench.

- Enterprise multilingual pipelines / region-sensitive deployments: Qwen3 Max — scalable context, multilingual strengths, and cloud provider partnerships.
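Teams that use more than one model often encode a mapping like this as a small routing table. The sketch below is hypothetical: the model identifiers are placeholders to be checked against each provider's documentation, and the escalation rule for large prototyping requests is illustrative only.

```python
from enum import Enum


class Workload(Enum):
    PROTOTYPING = "prototyping"               # quick prototyping, pair programming
    LARGE_REFACTOR = "large_refactor"         # multi-file refactors, style enforcement
    MULTILINGUAL_ENTERPRISE = "multilingual"  # region-sensitive, multilingual pipelines


# Placeholder model IDs; confirm exact identifiers in each provider's API documentation.
ROUTES: dict[Workload, str] = {
    Workload.PROTOTYPING: "gpt-5-codex",
    Workload.LARGE_REFACTOR: "claude-opus-4-1",
    Workload.MULTILINGUAL_ENTERPRISE: "qwen3-max",
}


def route(workload: Workload, files_touched: int = 1, escalate_above: int = 5) -> str:
    """Pick a model for a workload.

    Illustrative rule: a "prototyping" request that touches many files is treated
    as a refactor and sent to the refactor route instead.
    """
    if workload is Workload.PROTOTYPING and files_touched > escalate_above:
        return ROUTES[Workload.LARGE_REFACTOR]
    return ROUTES[workload]


if __name__ == "__main__":
    print(route(Workload.PROTOTYPING))
    print(route(Workload.PROTOTYPING, files_touched=12))
```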

Practical tips for teams

- Add metadata to model-generated commits (model version, prompt template, seed, token usage) for reproducibility.

- Gate model changes behind CI: require model-generated PRs to pass static analysis and human review.

- Use smaller context windows for short tasks to reduce cost; reserve large contexts for design and refactor sessions.

- Prefer conservative edit prompts (explicitly restrict allowed changes) for multi-file refactors — this aligns with Anthropic’s Opus 4 edit philosophy.

- Track regression drift and keep a baseline of deterministic tests so you can compare model variants over time.
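Several of these tips can be combined into a single merge gate for model-generated PRs: require static analysis and human review, reject edits outside an explicitly allowed file scope, and block any test that regresses against a stored baseline. This is a minimal sketch; `ModelPR`, `gate`, and the glob-based scope check are hypothetical and would need to be wired to your CI system's actual outputs.

```python
import fnmatch
from dataclasses import dataclass


@dataclass
class ModelPR:
    changed_files: list[str]       # paths touched by the model-generated PR
    static_analysis_passed: bool   # result of your linters / type checkers
    human_approvals: int           # number of human reviews approving the PR
    test_results: dict[str, bool]  # test id -> passed, from the PR's CI run


def out_of_scope(changed_files: list[str], allowed: list[str]) -> list[str]:
    """Files matching none of the allowed glob patterns (note: '*' also matches '/')."""
    return [f for f in changed_files if not any(fnmatch.fnmatchcase(f, p) for p in allowed)]


def regressions(baseline: dict[str, bool], candidate: dict[str, bool]) -> list[str]:
    """Tests that passed on the baseline but fail, or are missing, on the candidate."""
    return sorted(t for t, ok in baseline.items() if ok and not candidate.get(t, False))


def gate(pr: ModelPR, allowed: list[str], baseline: dict[str, bool], min_approvals: int = 1) -> list[str]:
    """Return reasons to block the merge; an empty list means the PR may proceed."""
    reasons = []
    if not pr.static_analysis_passed:
        reasons.append("static analysis failed")
    if pr.human_approvals < min_approvals:
        reasons.append(f"needs at least {min_approvals} human approval(s)")
    stray = out_of_scope(pr.changed_files, allowed)
    if stray:
        reasons.append(f"edits outside the allowed scope: {stray}")
    broken = regressions(baseline, pr.test_results)
    if broken:
        reasons.append(f"regressions against baseline: {broken}")
    return reasons


if __name__ == "__main__":
    baseline = {"test_totals": True, "test_tax": True, "test_flaky_export": False}
    pr = ModelPR(["src/billing/invoice.py", "setup.py"], True, 1,
                 {"test_totals": True, "test_tax": False, "test_flaky_export": False})
    for reason in gate(pr, ["src/billing/*"], baseline):
        print("BLOCKED:", reason)
```

Returning a list of reasons rather than a boolean keeps the gate's decision explainable in the PR itself.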

Beyond raw capability, consider observability and reproducibility. The commit metadata recommended above lets you trace regressions and reproduce results; also store generated code diffs alongside test runs. For mission-critical systems, run a blue/green deployment in which model-generated changes are first applied to a shadow environment that executes the full CI pipeline and fuzz testing before merging. Finally, measure not just functional correctness but also maintenance cost (how much manual cleanup model outputs require), and monitor for latent vulnerabilities introduced by automated edits.
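One lightweight way to attach the metadata listed in the tips above is as Git trailers (`Key: value` lines in the final paragraph of a commit message), which travel with the commit and can be parsed later. The trailer keys below are hypothetical, a seed may be unavailable from some hosted APIs, and `parse_trailers` is a simplified reader rather than Git's own trailer parser.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GenerationInfo:
    model_version: str
    prompt_template: str
    seed: Optional[int]  # None when the provider does not accept or report a seed
    input_tokens: int
    output_tokens: int


def with_trailers(subject: str, body: str, info: GenerationInfo) -> str:
    """Compose a commit message whose final paragraph holds hypothetical metadata trailers."""
    trailers = {
        "Model-Version": info.model_version,
        "Prompt-Template": info.prompt_template,
        "Seed": "none" if info.seed is None else str(info.seed),
        "Token-Usage": f"{info.input_tokens} in / {info.output_tokens} out",
    }
    parts = [subject.strip()]
    if body.strip():
        parts.append(body.strip())
    parts.append("\n".join(f"{key}: {value}" for key, value in trailers.items()))
    return "\n\n".join(parts) + "\n"


def parse_trailers(message: str) -> dict[str, str]:
    """Simplified reader: parse 'Key: value' lines from the message's last paragraph."""
    last_paragraph = message.strip().split("\n\n")[-1]
    trailers = {}
    for line in last_paragraph.splitlines():
        key, sep, value = line.partition(": ")
        if sep:
            trailers[key] = value
    return trailers


if __name__ == "__main__":
    info = GenerationInfo("example-model-2025-01", "refactor-v3", 42, 1200, 300)
    message = with_trailers("Refactor billing module", "Split invoice helpers into their own file.", info)
    print(message)
    print(parse_trailers(message)["Model-Version"])
```

The composed message can be written to a file and committed with `git commit -F <file>`.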

The Bottom Line

Qwen3 Max, GPT-5 (and its Codex specialization), and Claude Opus 4.1 each push the frontier of coding assistants, but with different tradeoffs. GPT-5 emphasizes integrated, agentic developer workflows and interactive coding; Claude Opus 4 prioritizes safe, conservative multi-file engineering and long-horizon tasks; Qwen3 Max competes on scale, multilingual capability, and enterprise deployment patterns. For teams, the right answer is workload dependent: rapid prototyping and tight IDE workflows favor GPT-5/Codex; surgical refactors and minimization of regressions favor Claude Opus 4.x; regionally constrained or multilingual enterprise pipelines may find Qwen3 Max the better fit.
