Anthropic calls its newly released Claude Sonnet 4.5 the best coding model in the world. It's a refined upgrade to the Sonnet line, pitched to outdo even the Opus line, with a focused agenda: to excel at long-running autonomous agent workflows, software engineering tasks, and enterprise-level safety. Benchmarks show it leading across a range of areas, including reasoning and math, and, conveniently, it costs the same as Claude Sonnet 4 (which you can try here). This article offers a detailed Claude Sonnet 4.5 vs GPT-5 vs Claude Opus 4.1 comparison to cut through the noise and find out which model is best for coding in 2025.

Claude Sonnet 4.5 Overview – A Worthy Upgrade?

Claude Sonnet 4.5 embodies a measured but meaningful step forward in Anthropic’s model lineup. It refines the balance between performance, reliability, and cost, with particular attention to longer task continuity and improved benchmark scores. While not a radical departure from earlier releases, it delivers enough consistency and capability upgrades to stand out as a practical choice for organizations already invested in the Claude ecosystem. Let’s understand more about it through these points:

- Endurance & continuity: In demos, Sonnet 4.5 has been shown running continuous agentic or coding workflows for 30+ hours without losing coherence or derailing. That’s a big leap compared to prior models.

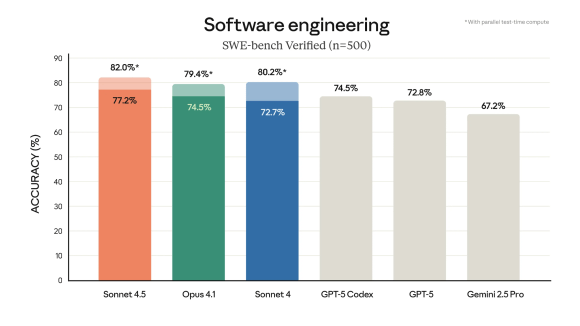

- Benchmark gains: It leads on OSWorld (a benchmark of real-world computer tasks) with a score of ~61.4%, versus ~42.2% for prior Sonnet versions. It also improves on SWE-bench Verified and on reasoning and math tasks.

- Same pricing as previous Sonnet: According to Anthropic, the pricing remains unchanged at $3 per million input tokens and $15 per million output tokens.

- Better alignment & safety behavior: Anthropic claims reduced tendencies toward sycophancy, deception, power seeking, delusional thinking, and prompt injection vulnerabilities. It is released under their AI Safety Level 3 (ASL-3) protections.

- Integration & availability: Sonnet 4.5 is deployed on Amazon Bedrock, Google Vertex AI, and in Anthropic’s own Claude product line, making it more widely available to enterprise customers.

- Technical improvements: It features improved context management (especially for long conversations), better tool orchestration (e.g., automatically clearing old tool histories), and parallel tool execution in some settings.

In short: Sonnet 4.5 is the version of Claude to bet on if you want a model that can think and act autonomously for longer time horizons, while preserving safety, coding strength, and enterprise readiness — all without raising token prices.

Claude Sonnet 4.5 vs GPT-5 vs Opus 4.1 – Detailed Coding Comparison

Let’s break this down across all the axes that matter: features, performance, safety, cost, ecosystem, developer experience, and real-world suitability.

Feature & Capability Comparison

Here’s a side-by-side feature comparison to help us get oriented.

| Criteria | Claude Sonnet 4.5 | Claude Opus 4.1 | GPT-5 |
|---|---|---|---|
| Intended positioning | Long agentic workflows, enterprise, coding + safety | General high-end Claude, coding, multi-step tasks with strong reasoning | Broad general-purpose, high steerability, product integration |
| Agentic endurance | Designed for marathon runs (30+ hour demos) | Strong but less emphasized for extreme durations | Good tool chaining and agent support, but public claims say less about multi-day runs |
| Context window | ~200,000 tokens (plus internal management) | ~200,000 tokens (typical Claude context) | Reported up to ~400,000 tokens (depending on version/tier) in some comparisons |
| Coding / SWE benchmarks | Leading in SWE-bench Verified, strong in OSWorld tasks | Very strong in coding/refactoring and reasoning tasks | Competitive or slightly ahead in many coding/algorithm tasks per independent benchmarks |
| Steerability / personality control | Conservative defaults, alignment tuning, strong safe fallback | Classic Claude system control, moderate steerability | Richer knobs for verbosity, minimal reasoning, persona, memory control |
| Safety & guardrails | Emphasis on reduced misaligned behavior, prompt injection defenses, ASL-3 classification | Good safety pedigree in the Claude line; robust, but not marketed as a safety-frontier model | OpenAI's safety layers and moderation filters, with occasional tradeoffs at the edges |
| Tool orchestration & memory | Supports automatic cleanup, memory management, parallel tool calls | Strong multi-step tool orchestration, memory features in Claude lineage | Native agent and memory support; good integration with OpenAI tooling |
| Integration & availability | Bedrock, Vertex, Claude platform, SDK (Agent SDK) | Available via Anthropic API, Bedrock, Vertex | Native in ChatGPT products, OpenAI API, deep integration with Microsoft stack |
| Latency & throughput | Engineered for sustained runs and throughput; per-token latency may not be minimal | Balanced for typical usage | Optimized for consumer and product speed |
| Common use cases | Autonomous agents, long pipelines, coding + safety-critical tasks | Multi-file refactors, complex reasoning, large workflows | Versatile: from chat assistants to coding, product features, creative tasks |

Some observations from the table:

- Sonnet is more specialized toward endurance, safety, and long workflows.

- Opus remains a reliable all-rounder in the Claude family with strong coding/logic capabilities.

- GPT-5’s strength is breadth: flexibility, steerability, integration, and more aggressive performance in varied use cases.

Claude Sonnet 4.5 vs GPT-5 vs Claude Opus 4.1: Pricing Comparison

One of the trickiest comparisons is cost, because pricing is per token (split into input and output) and varies by tier, caching, and other factors. Here is a snapshot of public or semi-public pricing data (with caveats):

| Pricing (USD) | Claude Sonnet 4.5 | Claude Opus 4.1 | GPT-5 |
|---|---|---|---|
| Input token price | $3 per million tokens | $15 per million tokens | ~$1.25 per million tokens (standard tier) |
| Output token price | $15 per million tokens | $75 per million tokens | ~$10 per million tokens (standard tier) |
| Notes / tiering / cache / savings | Same as Sonnet 4 pricing | As per Claude pricing table (Opus line) | From public comparisons and API pricing estimates |

From these numbers, the rough ordering is:

- GPT-5 is the cheapest (per token) among the three in most conventional settings.

- Sonnet 4.5 is midrange — much cheaper than Opus, but more expensive than GPT-5 in token cost.

- Opus 4.1 is at the premium end, particularly for output tokens.
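To make the per-token figures concrete, here is a minimal cost calculator. It simply multiplies token counts by the list prices from the table above (standard tier, no caching or batch discounts, which is an assumption) and runs the 1M-input / 100k-output workload that also appears in the performance comparisons.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """List prices in US dollars per million tokens."""
    input_per_m: float
    output_per_m: float


# Standard-tier list prices from the pricing table; verify against current
# provider pricing before relying on them (caching/batch discounts ignored).
PRICES = {
    "Claude Sonnet 4.5": Pricing(3.00, 15.00),
    "Claude Opus 4.1": Pricing(15.00, 75.00),
    "GPT-5": Pricing(1.25, 10.00),
}


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request: each token count times its per-million price."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


if __name__ == "__main__":
    # 1M input tokens + 100k output tokens
    for name, p in PRICES.items():
        print(f"{name}: ${request_cost(p, 1_000_000, 100_000):.2f}")
```

For this workload the script prints $4.50 for Sonnet 4.5, $22.50 for Opus 4.1, and $2.25 for GPT-5, matching the ordering above.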

Performance, Latency, and Efficiency

- In real developer benchmarks comparing GPT-5 vs Opus 4.1, some reports show GPT-5 using far fewer tokens and being faster for typical day-to-day dev tasks. For example, when converting a Figma design to code, GPT-5 (Thinking) cost ~$3.50 vs ~$7.58 for Opus 4.1 in one experiment.

- One blog states that GPT-5 is “slower and more expensive but consistently more accurate” in multi-step reasoning vs Opus 4.1, which suggests tradeoffs depending on task type.

- In some cost comparisons, Opus 4.1 is shown to cost ~$22.50 (for 1M input + 100k output tokens) vs ~$2.25 for GPT-5, a 10x difference.

- Others caution that token usage differentials vary by prompt style, domain, verbosity settings, and tool chaining logic.

Thus, token cost alone is a rough guide — real performance and “cost per task” matter much more.
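One simple way to see why "cost per task" diverges from token price is to account for retries. The sketch below assumes each attempt succeeds independently with a fixed probability and that failures are just rerun, so the number of attempts is geometric with mean 1 / success rate. The per-attempt costs and success rates in the example are hypothetical, not measured figures for any of these models.

```python
def expected_cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend to obtain one accepted result.

    Assumes attempts succeed independently with probability `success_rate`
    and failures are simply retried, so expected attempts = 1 / success_rate.
    Ignores human review time and correlated failures.
    """
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


if __name__ == "__main__":
    # Hypothetical: a cheap model that often needs retries
    # vs a pricier model that usually succeeds first time.
    cheap = expected_cost_per_success(cost_per_attempt=2.25, success_rate=0.25)
    pricey = expected_cost_per_success(cost_per_attempt=4.50, success_rate=0.90)
    print(f"cheaper per token: ${cheap:.2f} per completed task")
    print(f"pricier per token: ${pricey:.2f} per completed task")
```

Under these made-up numbers the model that is cheaper per attempt ends up at $9.00 per completed task versus $5.00, which is the kind of reversal the token-price table alone cannot show.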

Safety, Hallucinations & Alignment

- Sonnet 4.5 puts particularly heavy emphasis on safer behavior: reduced sycophancy, delusion, power seeking, prompt injection resilience, and alignment to user intent. Those claims are baked into the launch narrative.

- Opus 4.1 carries the safety legacy of Claude models; it’s strong in compliance, document analysis, and enterprise settings. It may be somewhat less “frontier safe” than Sonnet, but is robust.

- GPT-5 has standard OpenAI safety and moderation frameworks. In many cases, it allows more aggressive or permissive outputs (depending on system message configuration). Some critics point out GPT-5 occasionally has hallucination or verbosity tradeoffs.

- In heavily regulated or high-stakes domains (finance, legal, healthcare), Sonnet’s alignment-heavy pitch might make it safer by default, whereas GPT-5 may require more guardrail design from developers.

Developer Ergonomics, Tooling & Ecosystem

- Sonnet 4.5 gets extra support via Claude Agent SDK (the same infrastructure powering Claude Code) to help developers build agentic workflows more easily.

- It also features intelligent context window management (e.g. when context gets long, it gracefully truncates responses rather than erroring), and tool usage cleanup to reduce token bloat.

- Opus 4.1 fits naturally into the Claude ecosystem, and benefits from mature integrations in Anthropic’s API, Bedrock, Vertex, and prior toolchains.

- GPT-5 has the advantage of being the “default” in many product stacks: it’s baked into ChatGPT, has strong SDKs, integrates with existing OpenAI tooling, and has rich steerability control in many frameworks.

- For developers, switching between prompts, memory, chaining, and tools is often easier in GPT ecosystems simply because of maturity and adoption.
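The "tool usage cleanup" idea can be illustrated on the client side. The sketch below is not Anthropic's API: it assumes a hypothetical message format (`{"role": ..., "content": ...}` dicts, with tool outputs marked `"role": "tool"`) and a hypothetical `keep_last` parameter, and replaces all but the newest tool results with a short placeholder so stale outputs stop consuming context tokens.

```python
from typing import Any

# Hypothetical placeholder text that stands in for cleared tool output.
PLACEHOLDER = "[older tool output cleared]"


def clear_old_tool_results(
    messages: list[dict[str, Any]], keep_last: int = 3
) -> list[dict[str, Any]]:
    """Return a copy of `messages` where every tool result except the newest
    `keep_last` has its content replaced by PLACEHOLDER.

    The input list and its dicts are not modified.
    """
    tool_indices = [i for i, m in enumerate(messages) if m.get("role") == "tool"]
    # tool_indices[:-0] would be empty, so handle keep_last <= 0 explicitly.
    to_clear = set(tool_indices[:-keep_last]) if keep_last > 0 else set(tool_indices)
    return [
        {**m, "content": PLACEHOLDER} if i in to_clear else dict(m)
        for i, m in enumerate(messages)
    ]


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Fix the failing tests"},
        {"role": "tool", "content": "pytest output #1 ..."},
        {"role": "assistant", "content": "Patched utils.py"},
        {"role": "tool", "content": "pytest output #2 ..."},
        {"role": "tool", "content": "pytest output #3 ..."},
    ]
    for m in clear_old_tool_results(history, keep_last=2):
        print(m["role"], "->", m["content"])
```

Keeping the most recent results intact preserves what the agent is actively reasoning about, while older, bulky outputs are reduced to a marker that still records a tool call happened.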

Real Use Cases & Fit

To choose between these, think in terms of what you want to build. Here are scenario matches:

- Unattended autonomous agents or long pipelines (e.g. automated code maintenance, long data processing, multi-stage tool orchestration): Sonnet 4.5 is the leading candidate because of its endurance claims and agent enhancements.

- Large software projects / multi-file refactoring / codebase intelligence: Opus 4.1 or Sonnet both shine; Opus is steady and trusted, Sonnet offers the advantage of newer improvements and endurance.

- High-velocity product features, chat assistants, mixed creative + coding tasks: GPT-5 gives more flexibility, product leverage, and lower token cost (for many use cases).

- Safety-critical or highly regulated domains: Sonnet’s strong alignment positioning could reduce the burden of oversight; Opus is safe but perhaps not as “frontier aligned” as Sonnet; GPT-5 may require more safety scaffolding.

- Cost-sensitive, scale workloads: GPT-5 likely wins on many task-level cost comparisons, especially where token usage is high, provided error / retry rates are manageable. Sonnet might win when its endurance or orchestration complexity saves developer time versus stitching many GPT calls.

Bind AI’s Recommendations

- If your chief requirement is long, uninterrupted agentic workflows with minimal drift and strong safety posture, start with Sonnet 4.5. Its claim to sustaining 30+ hour workloads is a practical game-changer, and if that works in your environment, it can simplify architecture.

- If your focus is robust, general-purpose coding, analytical reasoning, or multi-step project tasks, Opus 4.1 remains an extremely solid choice (especially if you already use the Claude stack).

- If your workload mixes chat, product features, creative tasks, coding, memory, and integration depth — and cost per token matters heavily — GPT-5 is still a compelling, versatile pick.

Before you commit to a model, make sure you:

- Run real-world tests (your codebase, your data) for multi-file tasks, error recovery, and drift over time.

- Compute cost per completed task (not just token cost) by including retries, human corrections, and orchestration overhead.

- Test safety and adversarial edge cases in your domain (prompt injection, hallucination, governance).

- Check latency, throughput, and infrastructure fit (does your cloud provider support the model natively?).

- Evaluate integration complexity (agent SDKs, memory, tooling, logs, debugging).
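For the "cost per completed task" check, a small aggregation over your own test runs is often enough. This is a sketch under stated assumptions: the `TaskRun` fields, the reviewer hourly rate, and the example runs are all hypothetical, and token prices are passed in per million tokens (the example uses the Sonnet 4.5 list prices). Failed runs still count toward spend but not toward the number of completed tasks.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    """One task from your own evaluation suite (field names are illustrative)."""
    input_tokens: int      # summed over every attempt, including retries
    output_tokens: int
    completed: bool        # did the task end in an accepted result?
    human_minutes: float   # reviewer time spent verifying or correcting output


def cost_per_completed_task(
    runs: list[TaskRun],
    input_per_m: float,
    output_per_m: float,
    reviewer_rate_per_hour: float,
) -> float:
    """(Token spend + human review spend) / number of completed tasks."""
    token_cost = sum(
        r.input_tokens * input_per_m + r.output_tokens * output_per_m for r in runs
    ) / 1_000_000
    human_cost = sum(r.human_minutes for r in runs) / 60 * reviewer_rate_per_hour
    completed = sum(1 for r in runs if r.completed)
    if completed == 0:
        return float("inf")  # money spent, nothing delivered
    return (token_cost + human_cost) / completed


if __name__ == "__main__":
    # Hypothetical runs; reviewer time valued at a hypothetical $60/hour.
    runs = [
        TaskRun(200_000, 20_000, True, 5),
        TaskRun(300_000, 40_000, False, 10),
        TaskRun(150_000, 10_000, True, 0),
    ]
    cost = cost_per_completed_task(runs, 3.00, 15.00, reviewer_rate_per_hour=60)
    print(f"${cost:.2f} per completed task")
```

With these example numbers, $3.00 of tokens plus $15.00 of reviewer time spread over two completed tasks gives $9.00 per task, so human correction time dominates the token bill. Running the same suite against each candidate model makes the comparison apples to apples.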

The Bottom Line

Claude Sonnet 4.5 is clearly Anthropic’s bet on long-haul autonomy and safer enterprise deployments, while Opus 4.1 continues to serve as a steady all-rounder for code and reasoning. The right choice depends on your priorities: cost per task, safety posture, or ecosystem integration. You can try GPT-5, Claude Sonnet 4, and other advanced models here.